In the era of artificial intelligence (AI) dominance, the spotlight often shines on the sophistication of algorithms and the rapid evolution of large language models (LLMs). However, there’s a less glamorous but arguably more foundational element behind every impressive AI demonstration: data. In fact, it’s no exaggeration to say, “no AI without data.” As powerful models continue to grow in size and impact, the quality and ethics of the data that trains them must be brought to the forefront. Before tuning code or pushing GPU limits, organizations must first look inward and ask: are we collecting the right data, in the right way?

The Invisible Backbone of AI

Behind every intelligent response from an AI model lies an intricate network of labeled examples, vast datasets, and billions of tokens. These datasets serve as both the knowledge base and the behavioral template for the models trained on them. While headlines focus on model performance, little credit goes to the infrastructure that supports it: data collection, curation, and preprocessing.

Most AI models are only as good as the data that trains them. Garbage in, garbage out. Yet data’s role is often overshadowed by the allure of breakthrough architectures or benchmark scores. The unfortunate result? Researchers dedicate disproportionate energy to model optimization while overlooking how flawed, biased, or incomplete their underlying data may be.

Why Data Collection Is the Original Bottleneck

Fine-tuning depends on labeled data, and labeling depends on sourcing. What is sourced, and from where, profoundly shapes model behavior. If your dataset is skewed or relies heavily on unverified online text, your model inherits those biases and inaccuracies, sometimes in ways that are hard to detect.

Issues in data collection that need immediate attention include:

- Biased Sampling: Data drawn heavily from Western, English-language sources produces AI that understands the rest of the world poorly.

- Lack of Representation: Minority communities, cultures with little online presence, and niche industries are often missing from training data.

- Outdated Information: Static datasets mean models become stale quickly, especially in fast-evolving domains like medicine or law.

- Ethics and Consent: Was the data collected with permission? Does it respect privacy norms?
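Skewed sampling and missing representation are easier to address once they are measured. The sketch below assumes each record carries a metadata tag such as a language code; the function name `representation_report`, the `lang` field, and the 15% threshold are hypothetical choices for illustration. It reports each group's share of the corpus and flags groups that fall below the threshold.

```python
from collections import Counter


def representation_report(records, field, min_share):
    """Return each group's share of the records and the groups below min_share."""
    counts = Counter(record[field] for record in records)
    total = sum(counts.values())
    if total == 0:
        return {}, []
    shares = {group: count / total for group, count in counts.items()}
    flagged = sorted(group for group, share in shares.items() if share < min_share)
    return shares, flagged


# Hypothetical toy corpus: each record carries a language tag.
corpus = (
    [{"text": "...", "lang": "en"}] * 8
    + [{"text": "...", "lang": "es"}]
    + [{"text": "...", "lang": "sw"}]
)
shares, flagged = representation_report(corpus, "lang", min_share=0.15)
print(shares)   # {'en': 0.8, 'es': 0.1, 'sw': 0.1}
print(flagged)  # ['es', 'sw']
```

A share threshold is a crude signal: it can only count groups that are already tagged, and it says nothing about whether the examples within a group are accurate or respectful.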

The Myth of “Just Make a Bigger Dataset”

It’s tempting to assume that more data always leads to better AI. The history of deep learning certainly supports the notion that size matters: GPT-3, for example, was trained on hundreds of billions of tokens. But more volume does not compensate for poor quality.

Big datasets introduce new problems if not carefully curated:

- Redundancy: Duplicated or near-duplicated data inflates training time without improving accuracy, and can encourage a model to memorize the repeated passages.

- Noisy Content: Unverified web-scraped sources can introduce misinformation and harmful content.

- Performance Ceiling: At a certain point, adding more of the same kind of data yields diminishing returns, especially if the data lacks diversity.
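Redundancy is one of the cheapest problems to catch. As a minimal sketch, the code below performs exact deduplication after light normalization (lowercasing and collapsing whitespace), hashing each document with SHA-256 and keeping only the first copy. The helper names `normalize` and `deduplicate` are hypothetical. This approach will not catch near-duplicates that differ by more than case or spacing; techniques such as MinHash are commonly used for that.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(documents):
    """Keep the first occurrence of each normalized document and drop repeats."""
    seen = set()
    unique = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


docs = [
    "Data quality matters.",
    "data   quality matters.",
    "Garbage in, garbage out.",
    "Data quality matters.",
]
print(deduplicate(docs))  # ['Data quality matters.', 'Garbage in, garbage out.']
```

Hashing keeps memory use proportional to the number of unique documents rather than their total length, which is why this pattern is a common first pass before more expensive near-duplicate detection.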

The smarter approach is to improve the relevance and integrity of the data rather than doubling down on mass gathering. In short: fix the pipeline before scaling it.

How to Rethink Data Collection in the Age of AI

If we accept that datasets are the fuel for the AI engine, then organizations must build systems that keep that fuel high-octane, clean, and ethically sourced. Here are some best practices currently reshaping AI development:

1. Build Feedback Loops with Users

Instead of relying solely on scraped content, engage end users through prompts and feedback mechanisms. Interactions from real users, gathered with their consent, yield training examples that are more relevant and better grounded in context.

2. Diversify Data Sources

Actively seek out underrepresented voices, languages, and platforms. Translating, verifying, and incorporating community-driven content can go a long way toward building inclusive AI.

3. Collaborate with Domain Experts

Some of the best data sits behind domain-specific barriers. Partnering with experts in law, medicine, engineering, and other fields helps ensure that the data reflects real-world nuance.

4. Document the Dataset Lifecycle

Initiatives like Datasheets for Datasets offer a structured way to document where data comes from, why it was collected, and what uses it is intended for. This documentation helps prevent unintended misuse and makes bias easier to spot before model training.

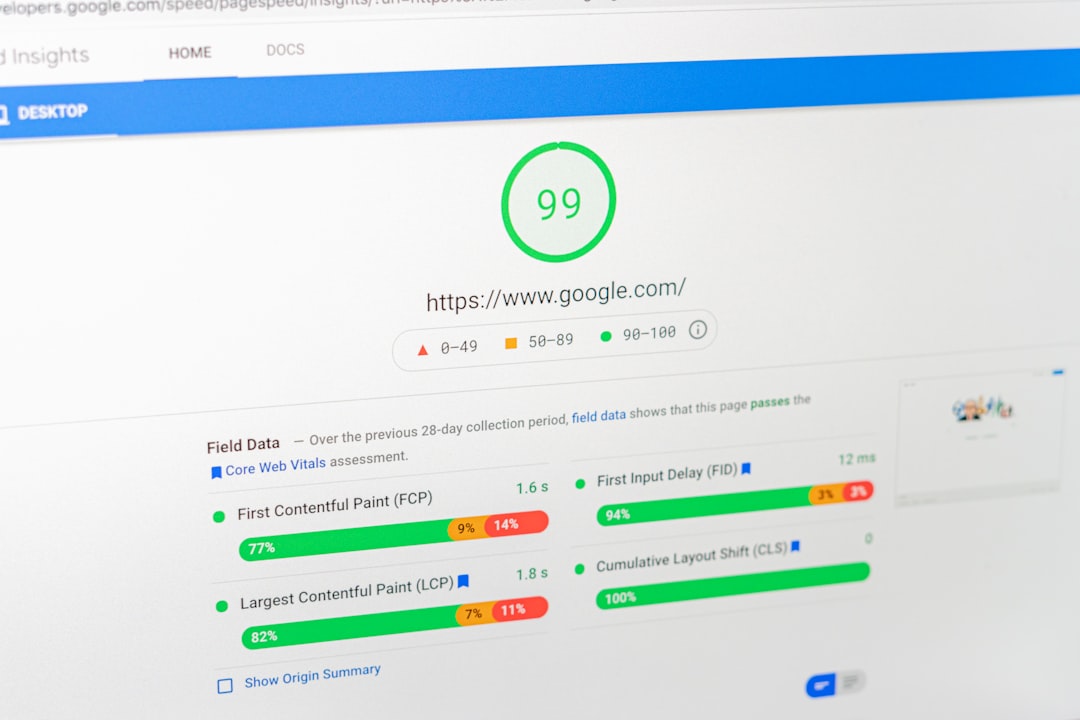
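Dataset documentation can be made partly machine-checkable. A minimal sketch, assuming a team stores each datasheet as a structured record: the `Datasheet` class, its field names (which loosely mirror the section headings proposed in Datasheets for Datasets), and the example dataset are hypothetical. A pipeline could use `missing_sections()` to refuse training on a dataset whose documentation is incomplete.

```python
from dataclasses import dataclass, fields


@dataclass
class Datasheet:
    """Hypothetical record loosely following the Datasheets for Datasets sections."""
    name: str
    motivation: str = ""          # Why was the dataset created, and by whom?
    composition: str = ""         # What do the instances represent?
    collection_process: str = ""  # How was the data gathered? Was consent obtained?
    preprocessing: str = ""       # What cleaning, filtering, or labeling was applied?
    uses: str = ""                # What tasks is it intended (and not intended) for?
    distribution: str = ""        # How is it shared, and under what license?
    maintenance: str = ""         # Who maintains it, and how are errors reported?

    def missing_sections(self):
        """Return the names of documentation sections that are still empty."""
        return [
            f.name
            for f in fields(self)
            if f.name != "name" and not getattr(self, f.name).strip()
        ]


sheet = Datasheet(
    name="support-tickets-2023",
    motivation="Train a triage classifier for customer support.",
    collection_process="Exported from the ticket system; users opted in to research use.",
)
print(sheet.missing_sections())
# ['composition', 'preprocessing', 'uses', 'distribution', 'maintenance']
```

The check only confirms that each section has been filled in, not that what is written there is accurate; review by people who know the data is still required.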

5. Validate with Real-World Outcomes

Rather than relying on synthetic benchmarks or academic tests alone, evaluate models against real-world economic, environmental, and ethical outcomes. That means building feedback mechanisms not just for the code layer, but for the data layer.

The Ethical Imperative

As AI’s footprint expands into healthcare, finance, governance, and education, the stakes of good data rise sharply. A flawed training corpus for a chatbot may only result in misinformation. But a flawed dataset behind predictive policing software can reinforce systemic injustice.

What’s worse, many of today’s most effective models are trained on datasets with opaque origins. As AI systems become increasingly central to decision-making, this lack of transparency poses significant legal and societal risks. Questions that were once philosophical are now regulatory:

- Who owns the training data?

- Was informed consent obtained?

- Can a model’s bias be traced back to specific data subsets?
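Answering these questions requires provenance to travel with the data. A minimal sketch, assuming every record is stored with hypothetical `source`, `license`, and `consent` fields (the field names, source IDs, and the rule that records with an "unknown" license are excluded are illustrative choices, not a standard): an audit summarizes which sources lack recorded consent, and a filter keeps only records that pass.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    text: str
    source: str    # where the record came from (hypothetical source IDs below)
    license: str   # terms attached by the source, "unknown" if none recorded
    consent: bool  # whether training use was explicitly permitted


def provenance_audit(records):
    """Map each source to (total records, records lacking recorded consent)."""
    total = Counter(r.source for r in records)
    no_consent = Counter(r.source for r in records if not r.consent)
    return {src: (total[src], no_consent[src]) for src in total}


def trainable(records):
    """Keep only records with explicit consent and a known license."""
    return [r for r in records if r.consent and r.license != "unknown"]


corpus = [
    Record("post A", "forum-scrape", "unknown", False),
    Record("post B", "forum-scrape", "unknown", False),
    Record("note C", "licensed-partner", "data-sharing-agreement", True),
    Record("doc D", "public-domain-archive", "public-domain", True),
]
print(provenance_audit(corpus))
# {'forum-scrape': (2, 2), 'licensed-partner': (1, 0), 'public-domain-archive': (1, 0)}
print(len(trainable(corpus)))  # 2
```

Because each record keeps its source tag through filtering, a bias later found in the model can at least be investigated source by source. A boolean flag only records that consent was claimed; it cannot show that the consent was informed.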

Accountability in AI starts with accountability in data.

The Role of Open Datasets

Open data initiatives like Common Crawl, The Pile, and OpenWebText have powered some of the most advanced AI systems today. But open datasets are not synonymous with open oversight. Many still lack proper documentation, and their reliance on scraped, unvetted data raises both quality and legal questions.

We’re reaching a tipping point where open data needs to evolve from being “freely available” to “responsibly constructed.” That includes:

- Establishing clearer norms for attribution and consent

- Creating funding structures for dataset stewardship

- Allowing for community governance of public AI datasets

The Future: Data as Infrastructure

Just as roads and power grids underpin modern economies, data infrastructure will be the invisible enabler of the AI economy. That vision requires more than better scrapers or larger corpora. It requires a decentralized but principled approach where data collection is:

- Transparent: Stakeholders can trace where and how data was gathered.

- Respectful: Privacy and ethics are not afterthoughts, but core tenets.

- Dynamic: Datasets are treated as living entities that must evolve with time.

In that world, data scientists are not just technicians — they are stewards. And model developers begin their process not with a technical spec, but with a critical question: *What story is the data telling? And who gets to tell it?*

Conclusion

AI’s capabilities will continue to astound us, but its integrity will depend on something far less visible and far more fundamental: the data we feed it. It’s time we shifted our focus from our fascination with models to the foundation they rest upon.

Before we strive for artificial intelligence, we must commit to authentic data. Fix the datasets, fix the models. That’s where real transformation begins.